Automatic cartography with deep learning

In this post we explain how to build a deep-learning-powered tool that automatically finds buildings in photos taken from airplanes. A collaboration with Geoin!

These are the results!

But how is it done?

The problem is simple to state: find all the buildings in photos taken from airplanes. The solution is almost as simple. It has two steps: first we find buildings at the pixel level, then we group those pixels into individual buildings.

Phase 1: classifying the pixels

Pixel-level segmentation of photos is one of the tasks that neural networks excel at. So, of course, we build a deep learning model to perform semantic segmentation. The model is trained on a corpus of thousands of hand-labeled aerial images, like the one below. The output, like the ground truth, is a binary image indicating where the buildings are.

example of a photo taken from an airplane

corresponding ground-truth label

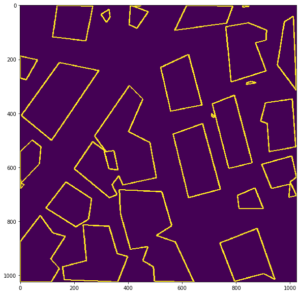
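To make the "binary image" idea concrete, here is a minimal sketch of what happens after the network has run. It assumes the network emits a per-pixel building probability and that we cut it at 0.5; both are our assumptions for illustration, as are the helper names `binarize` and `intersection_over_union`. Intersection over union is a common way to compare a predicted mask with a hand-labeled one, not necessarily the metric used in this project.

```python
def binarize(probabilities, threshold=0.5):
    """Turn per-pixel building probabilities into a 0/1 mask.

    The 0.5 threshold is an illustrative assumption.
    """
    return [[1 if p >= threshold else 0 for p in row] for row in probabilities]


def intersection_over_union(predicted, truth):
    """Compare two 0/1 masks of the same size: overlap area / combined area."""
    intersection = 0
    union = 0
    for predicted_row, truth_row in zip(predicted, truth):
        for p, t in zip(predicted_row, truth_row):
            intersection += p & t  # both masks say "building"
            union += p | t         # at least one mask says "building"
    # Two empty masks agree perfectly, so report 1.0 in that case.
    return intersection / union if union else 1.0


# Toy 2x3 "image": made-up network output and a made-up hand label.
probabilities = [[0.1, 0.9, 0.8],
                 [0.2, 0.7, 0.4]]
ground_truth = [[0, 1, 1],
                [0, 1, 1]]

mask = binarize(probabilities)
print(mask)                                         # [[0, 1, 1], [0, 1, 0]]
print(intersection_over_union(mask, ground_truth))  # 0.75
```

In the toy example the prediction misses one building pixel, so three of the four labeled pixels overlap and the score is 0.75.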

Phase 2: finding buildings as polylines

Next we need to separate the buildings. We can easily recover each building as a polyline (a list of the building's vertices) by finding the contours of the segmentation produced by the net. Because we work on binary maps, standard computer vision algorithms find these contours efficiently. Here is an example.

aerial image

output of the neural network

polylines recovered from the contours
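For the curious, the grouping and contour steps can be sketched in plain Python. This is a toy illustration under our own assumptions, not the code used in the project: buildings are taken to be 4-connected groups of pixels, only the outer outline is traced (holes are ignored), and the function names are made up. In practice a library routine such as OpenCV's `findContours` does this job much faster.

```python
from collections import deque


def label_components(mask):
    """Group foreground pixels (value 1) into 4-connected sets of (row, col)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill starting from this unvisited pixel.
            seen[r][c] = True
            queue, pixels = deque([(r, c)]), set()
            while queue:
                y, x = queue.popleft()
                pixels.add((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(pixels)
    return components


def trace_outline(pixels):
    """Return the outer outline of one component as a list of (x, y) corners.

    Pixel (row, col) covers the square from (col, row) to (col + 1, row + 1).
    """
    # Collect every pixel side that faces the background, as a directed edge
    # running clockwise on screen (y grows downwards).
    edges = set()
    for y, x in pixels:
        if (y - 1, x) not in pixels:
            edges.add(((x, y), (x + 1, y)))          # top side, heading east
        if (y, x + 1) not in pixels:
            edges.add(((x + 1, y), (x + 1, y + 1)))  # right side, heading south
        if (y + 1, x) not in pixels:
            edges.add(((x + 1, y + 1), (x, y + 1)))  # bottom side, heading west
        if (y, x - 1) not in pixels:
            edges.add(((x, y + 1), (x, y)))          # left side, heading north

    # The top-left corner of the top-most, left-most pixel is always on the
    # outer outline, and its top side always heads east from there.
    y0, x0 = min(pixels)
    start = (x0, y0)
    point, direction = start, (1, 0)
    polyline = [start]
    while True:
        point = (point[0] + direction[0], point[1] + direction[1])
        if point == start:
            return polyline
        dx, dy = direction
        # Prefer a right turn, then straight on, then a left turn.
        for turn in ((-dy, dx), (dx, dy), (dy, -dx)):
            if (point, (point[0] + turn[0], point[1] + turn[1])) in edges:
                if turn != direction:
                    polyline.append(point)  # direction changed: a vertex
                direction = turn
                break


# Toy segmentation with two separate buildings.
mask = [[0, 0, 0, 0, 0, 0],
        [0, 1, 1, 0, 0, 0],
        [0, 1, 1, 0, 1, 0],
        [0, 0, 0, 0, 1, 0]]

polylines = [trace_outline(component) for component in label_components(mask)]
print(polylines)
```

Each polyline keeps only the corners where the outline changes direction, so a rectangular building comes out as four vertices no matter how many pixels it covers.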

That’s all! The buildings are ready to be imported into your favorite GIS software.

Stay tuned to flair-tech.com for more blog posts about our active projects.